Hindsight > foresight > insight?

Theories of change and foresight

A few weeks ago, I wrote a response to Thomas Dunmore Rodriguez’s blog on whether theories of change are still useful in the international development sector.

While there’s little doubt theories of change have been frequently misused and abused, I argued that when we use theories of change to critically examine our hypotheses and assumptions, they remain very useful. I suggested that they can be most useful when we: 1) set clear boundaries, 2) are problem-driven, 3) are evidence-based, 4) are explicit about testing our assumptions, and 5) review key areas of focus regularly.

One speculative recommendation I made was to explore potential synergies between theories of change and scenario planning. This was a task Rick Davies addressed in a blog of his own, looking at potential synergies between ParEvo and theories of change. What ParEvo offers is a focus on developing alternative narratives and then assessing their desirability and probability.

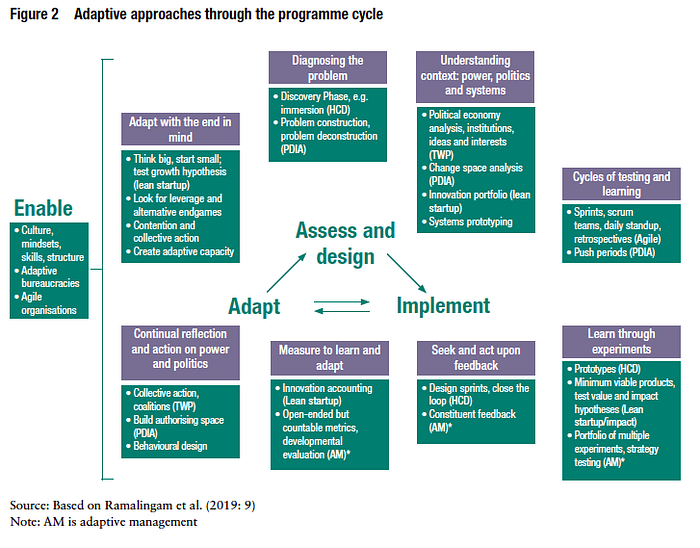

In a similar vein, this weekend, Jewlya Lynn argued that foresight practice should be in your evaluation toolkit. Her reasoning was that at the heart of theories of change are predictions about the future. I’m usually suspicious of the somewhat solutionist pitches from “lean startup,” “agile,” and “human-centred design.” However, as Jaime Pett points out, while the terminology and spin differ between approaches, there are some evident synergies with what the development sector calls “adaptive management,” as you can see below:

Making bets about the future

Another area of work which complements both theories of change and foresight is around decision-making. Lynn rightly asserts that one area where theories of change are constrained is that they tend to be about “one possible, relatively narrow pathway into one possible future.” Some recent efforts have been made to develop adaptive log frames and to discuss “evolving theories of change,” but Lynn seems correct to argue that there is generally still too much faith in a single scenario and a single future.

I’ve read a few books on decision-making recently, and two things in particular resonated with me. The first of these was thinking about the likelihood (or probability) of your desired pathway coming true. The second was about how important it is to reflect on our assumptions and biases (a pet agenda of mine).
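To make the first point concrete, here is a minimal sketch of how quickly the likelihood of a single pathway shrinks as assumptions stack up. The assumptions and their probabilities are entirely hypothetical, and the calculation treats them as independent, which real-world assumptions rarely are.

```python
from math import prod

# Hypothetical assumptions in a theory of change, each with an
# illustrative (made-up) probability of holding true.
assumption_probabilities = {
    "officials attend the training": 0.9,
    "officials apply what they learn": 0.7,
    "new practices improve services": 0.6,
}


def pathway_probability(probabilities):
    """Joint probability that every assumption holds, assuming independence."""
    return prod(probabilities)


p = pathway_probability(assumption_probabilities.values())
print(f"Chance the whole pathway holds: {p:.0%}")
```

Even when each assumption looks fairly safe on its own, the chance that all three hold together is well under half in this made-up case.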

Annie Duke, a behavioural scientist and former professional poker player, recently penned the book How to decide: Simple tools for making better choices. It actually does provide simple tools for making better choices, and a number of these tools are highly relevant to evaluators. The book shares a great deal in common with Steven Johnson’s book Farsighted: How we make decisions that matter the most. Both of these (and Duke’s previous Thinking in Bets) are accessible airport reading material, but are actually pretty thoughtful.

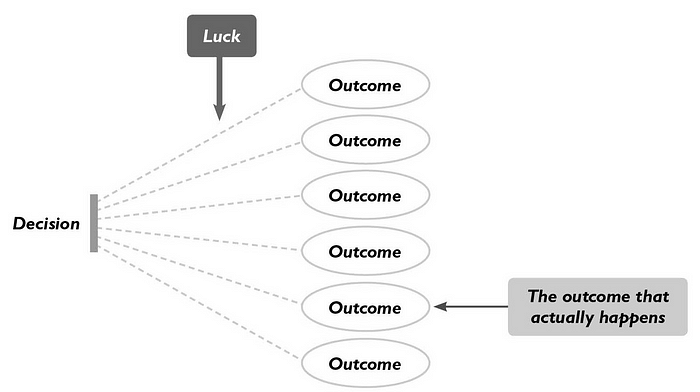

Duke suggests that all our decisions are really bets about the future. She points out that the outcome of any decision is one of many potential outcomes, and notes that luck will often come into play, whether we choose to acknowledge this or not.

Challenging biases

For me, the most compelling part of Duke’s book comes from her background as a behavioural scientist, and her emphasis on how cognitive biases shape the bets we make about the future. In a previous blog, I argued that we need to be more explicit about our assumptions and analyse these more critically. In my view, evaluators and project managers both need to take cognitive biases more seriously.

More often than not, when there is a good outcome, we assume this is because we made a good decision (i.e. our strategy was good) rather than because of good luck. As Duke highlights, we therefore tend to overstate how clever we are when things go right. We might label this a rose-tinted-spectacles hindsight bias.

This reminds me of a recent article I read on whether Coronavirus is Catalysing new Civic Collaboration for Open Government, where one interviewee affirmed that “the COVID-19 crisis has validated SPARK’s approach to working with large, broad-based civic organizations and social movements.” I leave it to the reader to decide how much we can put this down to luck or foresight.

Either way, the question is: what could you have known beforehand? And how likely was it that your anticipated outcome would materialise from your decision (or strategy)? When we employ system 2 thinking (slow, deliberative, logical) rather than system 1 thinking (fast, instinctive, emotional), whether consciously or not, we generally weigh up preferences, payoffs, and probabilities.
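One simple way to make that weighing explicit is to multiply each possible payoff by its probability and add up the results. The sketch below is my own illustration rather than a tool from Duke’s book, and the options, probabilities, and payoff scores are all hypothetical.

```python
# Hypothetical options. Each outcome is (probability, payoff), where the
# payoff is an illustrative score for how much we value that outcome.
options = {
    "scale up existing programme": [(0.8, 3), (0.2, -1)],
    "pilot a new approach": [(0.3, 10), (0.7, -2)],
}


def expected_payoff(outcomes):
    """Probability-weighted average payoff across an option's outcomes."""
    total_probability = sum(p for p, _ in outcomes)
    if abs(total_probability - 1.0) > 1e-9:
        raise ValueError("Outcome probabilities must sum to 1")
    return sum(p * payoff for p, payoff in outcomes)


scores = {name: expected_payoff(outcomes) for name, outcomes in options.items()}
best = max(scores, key=scores.get)

for name, score in scores.items():
    print(f"{name}: {score:.1f}")
print(f"Highest expected payoff: {best}")
```

In this made-up case the option with the most exciting upside is not the one with the highest expected payoff, which is the kind of thing an unweighted list can hide.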

Our normative preferences (what we think is right) and hindsight tend to anchor our decisions. As Duke notes, people have a tendency to pick one “anchor variable” and make their decision based on that element. In other words, we take shortcuts to foreground our preferences, and tend to hold these to a lower standard than alternatives that seem less desirable to us. For this reason, both Duke and Johnson criticise pros and cons lists, because these fail to account for how we weight our preferences. We’re also kinder to ourselves in judging the quality of our decisions than we are to the decisions of others. So, Duke suggests it’s helpful to benchmark yourself against others. If everyone else has failed at this before, why are you so confident you will succeed now?

As Chris Argyris points out, cognitive biases are also accompanied by “defensive reasoning” which, he argues, “protects us from learning about the validity of our reasoning.” Often, we just don’t want to know because we don’t want to be proved wrong. It’s therefore important to have conversations with others to walk through your reasoning, because as Peter Senge notes, “in dialogue, people become observers of their own thinking.” This is why theory of change workshops can be so helpful. However, particularly when we don’t question our assumptions, dialogue can also become groupthink. Argyris suggests that “we are carriers of defensive routines, and organisations are the hosts. Once organisations have become infected, they too become carriers.”

You therefore need to create checks and balances to counteract your defensive reasoning. You need to explicitly search for contradictory evidence. Try to disprove your hypothesis. Try to challenge your assumptions and think about how likely it is that your assumptions won’t hold. I’d argue that the more seriously you consider such checks and balances, the more likely you are to get to insight and make better decisions.